The goal of this final class project (EC418 Intro to Reinforcement Learning) is to create an agent capable of simulating Mario Kart (Pytux) by implementing reinforcement learning algorithms and training neural networks to predict aim points from the frames and improving its performance to minimize completion times. Potential enhancements included refining the controller, using better planning methods, incorporating reinforcement learning, or leveraging additional features like obstacle prediction and multiple aim points.

I began with reinforcement learning algorithms, beginning with Q-Learning:

This Q-learning implementation uses a table-based approach to map states (rounded aim point and velocity) to actions (e.g., steering, braking). The reward function combines progress (aim point alignment), velocity matching to a target, and penalties for collisions, ensuring the agent learns efficient and safe driving. Exploration and exploitation are balanced using an epsilon-greedy strategy with decay, and Q-values are updated with a weighted combination of immediate reward and future state value. Excessive rollouts are required; though agent still struggles to find convergence.

Q-Learning performed poorly. So next, I decided to implement Temporal Difference Learning with Linear Approximation:

Reintroducing Q-Learning with Linear Approximation, it produced greater results after 50+ rollouts. However, the agent struggled to learn a optimal policy due to the high-dimensional state space and the poor choice of the features consisting of velocity and acceleration.

Following this algorithm, I used features like aim point and velocity to extract states, which are evaluated through weight vectors (\( \theta \)) for actions such as steering, accelerating, and braking. The weights are updated using temporal difference learning, and the agent balances exploration and exploitation with an epsilon-greedy policy, while the reward function encourages smooth driving, progress, and speed maintenance.

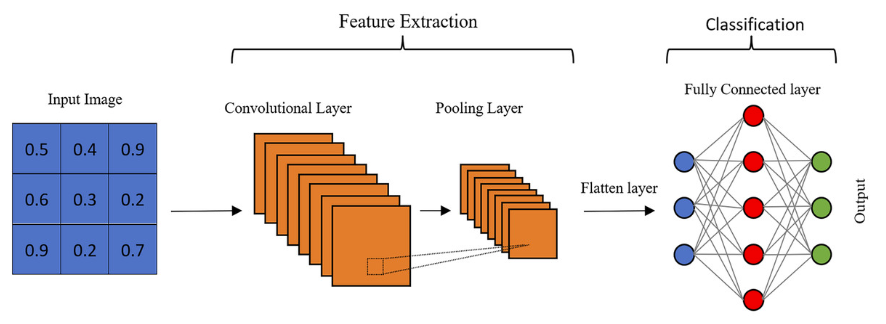

Deep Q-learning still struggles producing similar results to TD-Learning with Linear Approximation, but still better because it uses a neural network to approximate the \( Q \)-function, enabling it to handle complex, high-dimensional state-action spaces efficiently.

In theory, leveraging gradient descent, it minimizes the loss between the predicted \( Q \)-value and the target \( y_{\text{target}} = r + \gamma \max_{a'} Q_\theta(s', a') \), adjusting the parameters \( \theta \) in the direction of the gradient to improve the approximation iteratively. This combination allows the model to generalize well across states, making it more effective than traditional Q-learning.

In modifying the planner’s CNN parameters, it resulted that smaller kernel sizes improved sharp turn handling, which significantly lowered the number of frames. It turned out that using ReLu activation functions led to greater consistency but the agent still struggled on very sudden turns on certain tracks. Adding batch normalization and dropout resolved shortcut attempts but did not really contribute to reducing the completion times, time steps resulted similar to TD-Learning. In all, these adjustments significantly improved the agent's performance whereas Q-Learning failed terribly even after 1000+ rollouts, requiring a higher max frame cap as well.

Policy gradient methods optimize the policy directly by adjusting the parameters \(\theta\) in the direction of the gradient of expected reward. Another reinforcement learning algorithm

that I would like to try is Actor-Critic which uses two neural networks:

Using two frameworks, the actor network maps the states (e.g., kart velocity, track curvature, steer angle) to actions (e.g., steering, acceleration, drift), while the critic network evaluates the actor's value of each state. Thus, the actor learns and optimizes its policy \( \pi_{\theta}(s, a) \) via policy gradients, and the critic minimizes the temporal difference error between the predicted value and the target value.

For each neural network, they can share similar features respectively, although TD-Learning with Linear Approximation and Deep Q-Learning have proven to perform terribly under the current selected features. So another possibility is to use a combination of CNN, which has shown to be more effective, and try to extract features such as track geometry and subjective turns through labeling.

Finally, I experimented with a general PID controller which manages the steering and velocity of the kart through a feedback loop mechanism:

For this specific application, the steering PID controller minimizes the error between the kart's aim point and the track center, while the speed PID controller adjusts acceleration or braking to maintain a target velocity. The control() function uses these controllers to compute steering, acceleration, braking, and drifting actions, with mechanisms to reset integrals and rescue the kart when it is stuck or off-track. The performance of the controller significantly outperformed all the reinforcement learning algorithms I've tried with the features I chose, and managed to complete the fewest number of frames with minor struggles on sharp continous turns at times.

• Poor show of results with selected features

• Requiring excessively long training times

• Limited prior knowledge of neural networks

• Incompatibility with environment and anaconda packages

• Various reinforcement learning algorithms and their implementations

• More practice with python including libraries like Tensorflow and Pytorch

• Introduction to neural networks and their applications