Overview

The goal of this EC418 final project was to build an agent capable of driving in SuperTuxKart (PyTux) by predicting aim points from frames and selecting actions to reduce completion time. I explored multiple RL approaches, then compared them against a strong classical baseline (PID).

What I built

A training + evaluation pipeline with reward shaping, feature extraction, rollout tracking, and multiple learning/control methods under the same environment interface.

What I measured

Completion frames/time, stability in sharp turns, collision behavior, and convergence reliability under limited state features.

Reinforcement Learning

I began with tabular Q-Learning and then moved to linear function approximation due to the large state space.

\[ Q(s,a) \leftarrow Q(s,a) + \alpha \left[ r + \gamma \max_{a'} Q(s',a') - Q(s,a) \right] \]

The agent used an epsilon-greedy exploration strategy with decay. Rewards were shaped to encourage progress (aim alignment), safe driving, and target speed, while penalizing collisions and off-track behavior. Tabular methods struggled with convergence and required excessive rollouts.

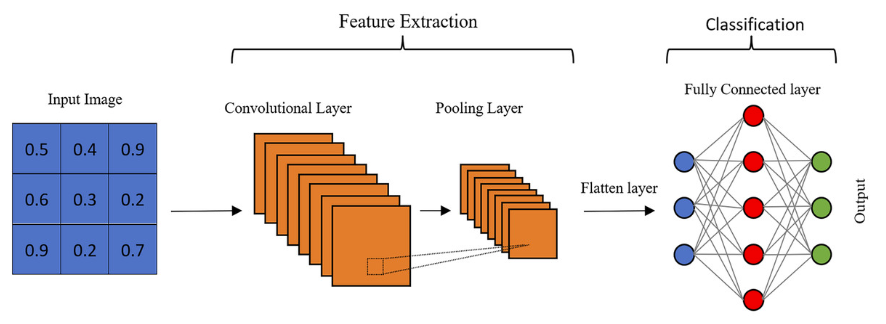

Neural Networks

Deep Q-Learning improved generalization by approximating the Q-function with a network, but stability remained sensitive to features and track geometry.

\[ \theta_{t+1} = \theta_t + \alpha_t \left( r + \gamma \max_{a'} Q_{\theta_t}(s', a') - Q_{\theta_t}(s, a) \right) \nabla_{\theta} Q_{\theta_t}(s, a) \]

Smaller kernels improved sharp turn handling. ReLU increased consistency, while batch norm + dropout prevented shortcut attempts but didn’t dramatically reduce completion times compared to TD baselines.

PID Controller

A classical PID baseline outperformed the RL approaches under the selected features, achieving smooth steering and reliable progress through turns.

\[ u(t) = K_p e(t) + K_i \int_{0}^{t} e(\tau) d\tau + K_d \frac{d}{dt} e(t) \]

Steering PID minimized lateral error (aim point vs. track center), and speed PID regulated velocity. Rescue logic handled stuck/off-track states while preventing integrator windup.

Why PID won (in this setup)

With limited and noisy features, PID produced consistent control without requiring long training. RL needed richer observations/features to reliably learn corner cases.

Results

PID achieved the most reliable completions with the fewest frames under the feature set used. RL approaches improved gradually but suffered from convergence instability and sensitivity to track conditions.

Best performing

PID Controller — robust, consistent, minimal tuning overhead.

Most promising direction

Vision + richer features (track geometry, curvature cues, better state encoding).

Reflection

Challenges

• High training time / many rollouts

• Feature selection limited performance

• Neural net tuning + environment dependency issues

What I learned

• RL algorithm tradeoffs in real systems

• Reward shaping + exploration design

• CNN tuning effects on behavior

by Justin Yu

by Justin Yu